Mitigating Framing Bias with Polarity Minimization Loss: Experiments

:::info

This paper is available on arXiv under the CC BY-NC-SA 4.0 DEED license.

Authors:

(1) Yejin Bang, Centre for Artificial Intelligence Research (CAiRE), The Hong Kong University of Science and Technology;

(2) Nayeon Lee, Centre for Artificial Intelligence Research (CAiRE), The Hong Kong University of Science and Technology;

(3) Pascale Fung, Centre for Artificial Intelligence Research (CAiRE), The Hong Kong University of Science and Technology.

:::

Table of Links

Abstract and Intro

Related Work

Approach

Experiments

Conclusion

Limitations, Ethics Statement and References

A. Experimental Details

B. Generation Results

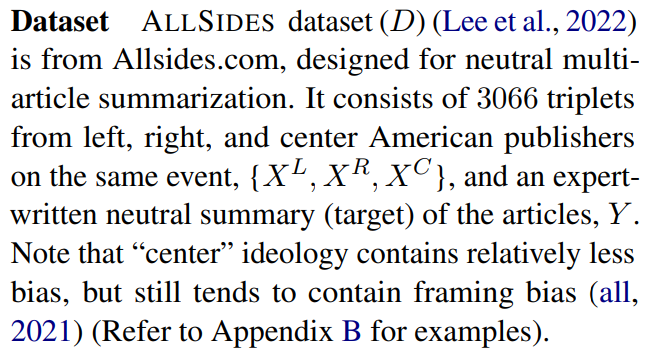

4. Experiments

4.1. Setup

4.2. Models

Baselines. We compare against off-the-shelf multi-document summarization (MDS) models trained on the Multi-News dataset (Fabbri et al., 2019), namely BARTMULTI (Lewis et al., 2019) and PEGASUSMULTI (Zhang et al., 2019a). These models achieve high performance on MDS and can be applied to summarizing polarized articles, but they receive no training on framing bias removal or neutral writing. We also compare against the state-of-the-art models BARTNEUSFT and BARTNEUSFT-T (Lee et al., 2022), which are fine-tuned on the ALLSIDES dataset. BARTNEUSFT is fine-tuned on articles only, whereas BARTNEUSFT-T additionally leverages the title of each article. We additionally report PEGASUSNEUSFT. Simple fine-tuning may not be effective enough for a model to learn about framing bias, so we demonstrate how the polarity minimization loss mitigates framing bias more effectively than the baseline and SOTA models.

4.3. Results

Effective learning with extreme polarities. We find that polarity minimization between the extreme ends (left, right) is more effective than a mixture that includes a center media outlet. Left- and right-wing ideologies sit at opposite ends of the spectrum, so they teach the model about extreme ends more effectively than center media outlets do, even though center media is not completely free of bias. The qualitative analysis aligns with the quantitative measures. For instance, as illustrated in Table 2, the polarity-minimized models LR-INFO and LRC-AROUSAL could both summarize the essential information from the polarized input articles. LR-INFO in particular, the least biased model, even used a more neutral choice of words (e.g., “protests” instead of “riots”, the same as the target Y).

4.4. Analysis
